Jonathan Roberts

Cambridge, UK

I am a PhD student based in the Machine Intelligence Laboratory at the University of Cambridge, supervised by Samuel Albanie (Google DeepMind), Kai Han (The University of Hong Kong), and Emily Shuckburgh (University of Cambridge). I am part of the Application of Artificial Intelligence for Environmental Risk (AI4ER) Centre for Doctoral Training (CDT).

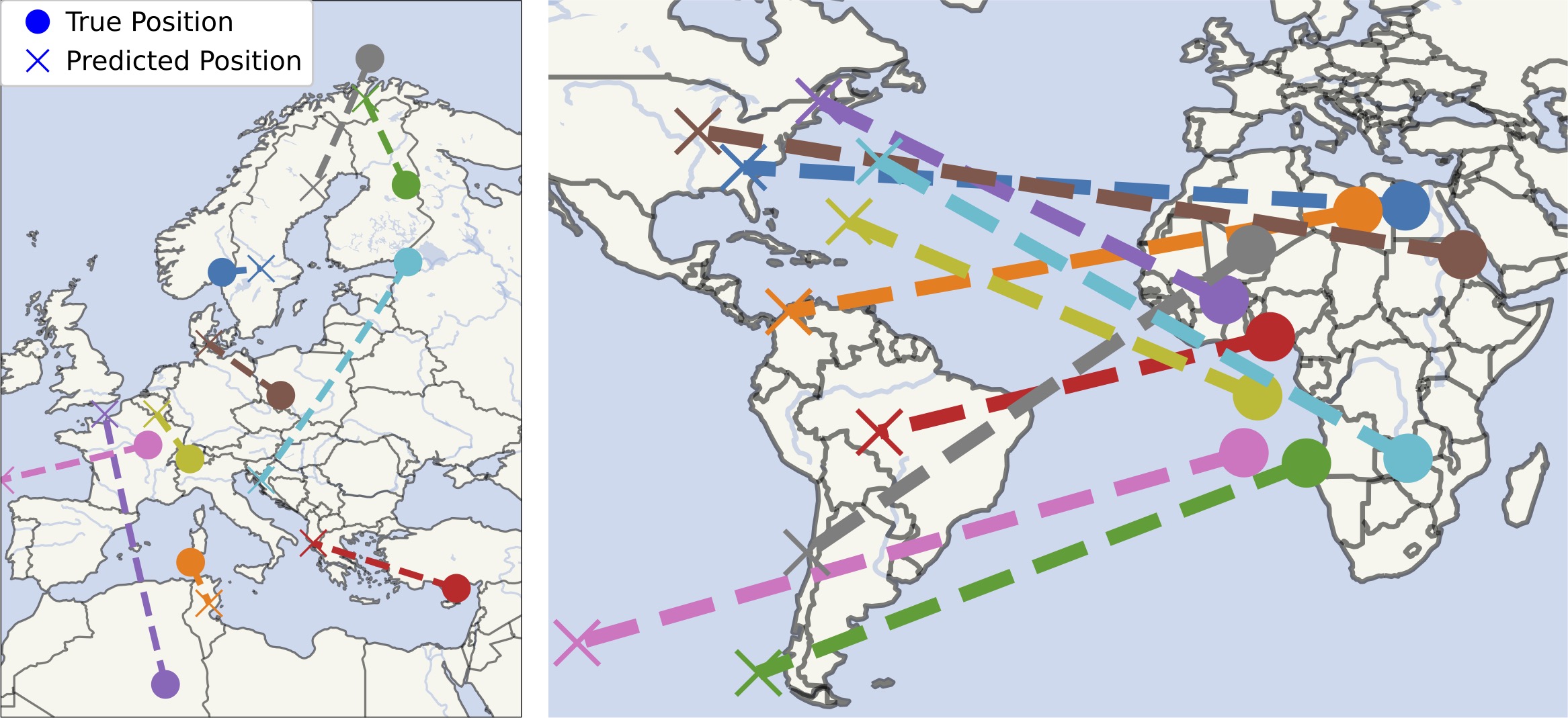

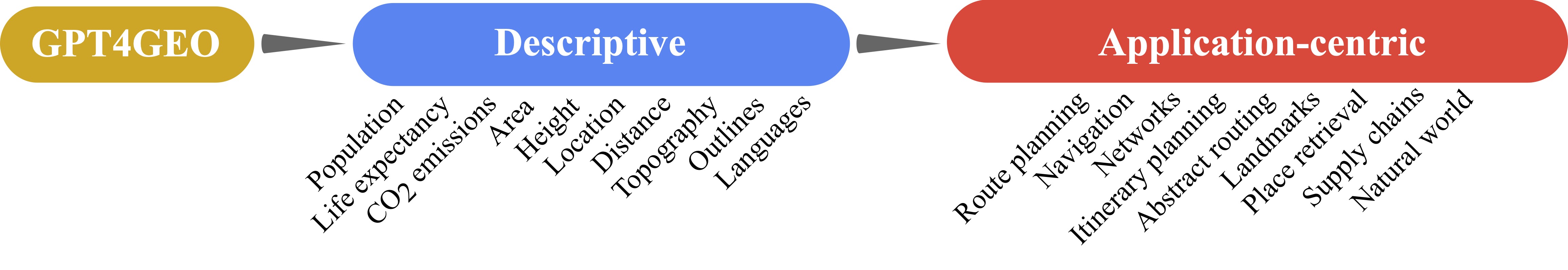

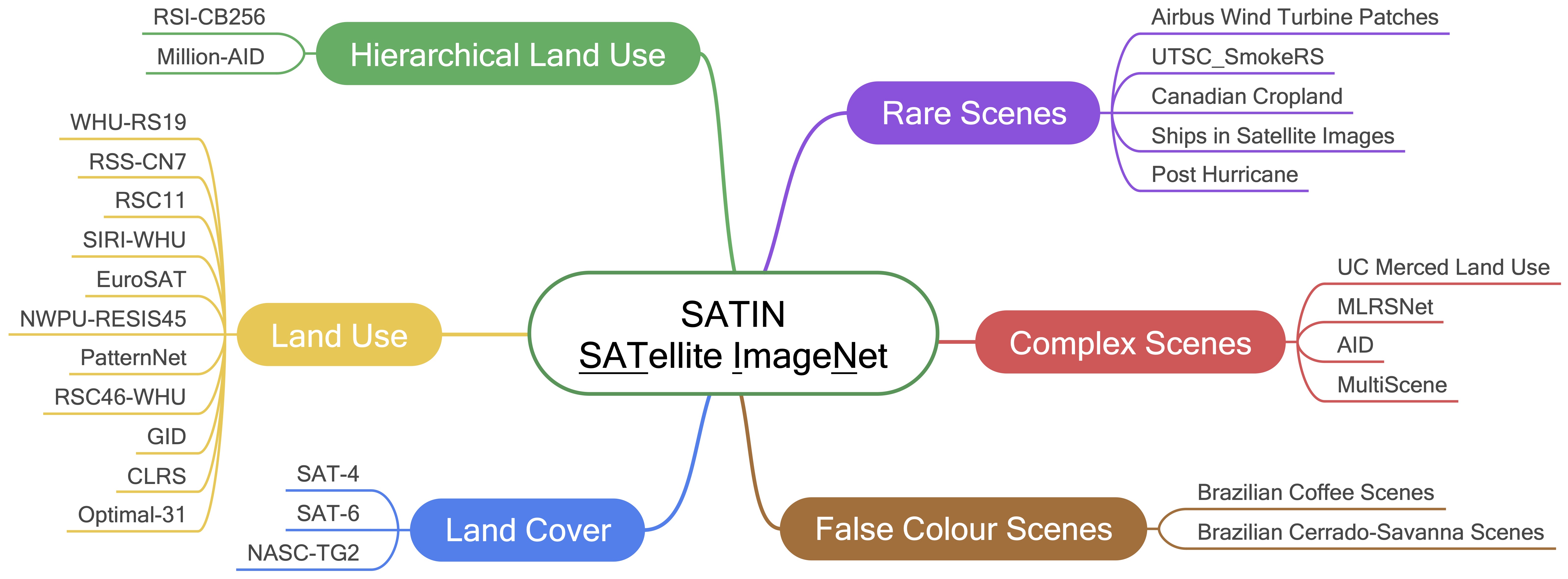

My research interests include evaluating, benchmarking and understanding the behaviour and capabilities of vision-language models, large language models and large multimodal models. I am particularly interested in the application of these models to the scientific and geospatial domains as well as long-context settings.

Previously, I completed an MRes in Environmental Data Science at the University of Cambridge. Before this, I worked as a Systems Engineer in the aerospace industry. My first degree was a Master of Physics (BSc MPhys) at the University of Warwick, supervised by Don Pollacco and Marco Polin.
